Why Generic AI Models Fail — and Why This Is Especially Dangerous in the Legal Domain (Watson.ch)

Based on a recent Swiss analysis (watson.ch)

https://www.watson.ch/!669651522

A new analysis from Switzerland shows that many AI models draw their knowledge from opaque and sometimes questionable sources. Users cannot tell where statements come from, or whether they are even correct. The investigation highlights four core problems that are particularly relevant for legal applications:

1. AI invents sources or uses unreliable data

The tested models delivered answers based on false, fabricated, or poorly traceable sources.

👉 In the legal world, this would be fatal: one wrong source = one wrong legal answer = a potential liability case.

2. Models contradict themselves

Depending on the phrasing of the question, AI systems produced different and contradictory answers.

👉 In law, this creates complete uncertainty: a ruling or statute does not change depending on how the question is phrased.

3. Lack of transparency: users cannot know what is true

The analysis shows that users have no way to verify the origin or quality of the answer.

👉 This is the biggest risk: without clear references, no one can determine whether the legal conclusion is valid.

4. With more complex questions, the AI “hallucinates”

As questions became more complex, the models began inventing facts or oversimplifying.

👉 Yet complex cases are precisely where legal accuracy is critical — an invented answer can lead to costly mistakes.

What does this mean for legal work?

This analysis demonstrates why general-purpose AI is unsuitable for legal questions:

❌ No reliable sources

❌ No guarantee of up-to-date information

❌ No transparency

❌ High error and hallucination rates

In law, “almost correct” is not enough. It must be correct.

Why Lawise / Jurilo is different

✓ Legally verified answers based on Swiss laws, commentaries, and Federal Supreme Court rulings

✓ 0% hallucinations — every answer is grounded in real legal sources

✓ Transparent references — always traceable and verifiable

✓ A specialised model instead of a black-box chatbot

While general AI models often provide entertaining or approximate responses, Lawise delivers correct answers — and in law, that is what counts.

Conclusion

The Swiss analysis shows that even for simple everyday questions, AI models deliver inaccurate or unclear information.

In legal practice, relying on such answers would be irresponsible.

👉 This is why we need specialised, verified legal AI, like Lawise, built on real legal sources and designed for maximum reliability.

Reference to the Watson article:

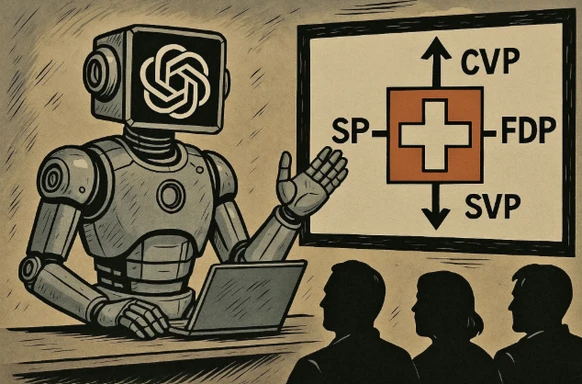

When asked, ChatGPT also explains complex topics in Swiss politics. But its research is not always broadly sourced or balanced.

Image: AI-generated/ChatGPT/bev